Mirage

A Multi-Level SuperOptimizer for Automatically Generating Fast GPU Kernels.

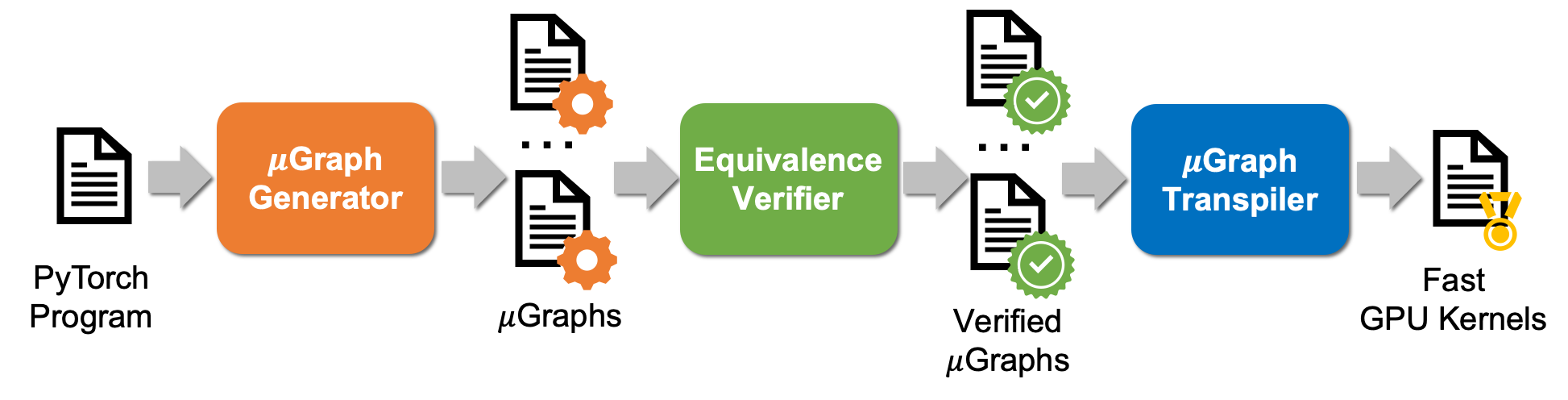

Mirage is a multi-level superoptimizer for automatically generating fast GPU kernels without programming in CUDA/Triton. Compared to traditional CUDA/Triton programming, Mirage represents a significant paradigm shift and offers three major advantages:

- Higher productivity: Programming modern GPUs requires substantial engineering expertise because GPU architectures are increasingly heterogeneous. Mirage aims to boost the productivity of MLSys engineers by simplifying this process. Engineers need only specify their desired computation at the PyTorch level; Mirage then takes over, automatically generating high-performance GPU kernels tailored to various GPU architectures. This automation frees programmers from the burdensome task of writing low-level, architecture-specific code.

- Better performance: Manually written GPU kernels often fall short of optimal performance because they miss subtle yet crucial optimizations that are hard to spot by hand (see examples in Part 2). Mirage automates the exploration of possible GPU kernels for any given PyTorch program, examining a broad range of implementations to uncover the fastest ones. Our evaluations across various LLM and GenAI benchmarks show that the kernels generated by Mirage are generally 1.2–2.5 times faster than the best human-written or compiler-generated alternatives. Furthermore, integrating Mirage kernels into PyTorch programs reduces overall latency by 15–20%, with only a few lines of code changes required.

- Stronger correctness: Manually implemented GPU kernels in CUDA/Triton are error-prone, and their bugs are hard to locate and debug. Mirage instead uses formal verification techniques to automatically verify the correctness of the generated GPU kernels.